Large language models (LLMs) have taken the tech world by storm, and everyone is rushing to adapt them to their use cases. These adaptations take three main forms: using vanilla models directly, fine-tuning (often with parameter-efficient fine-tuning (PEFT) methods such as LoRA), and Retrieval-Augmented Generation (RAG).

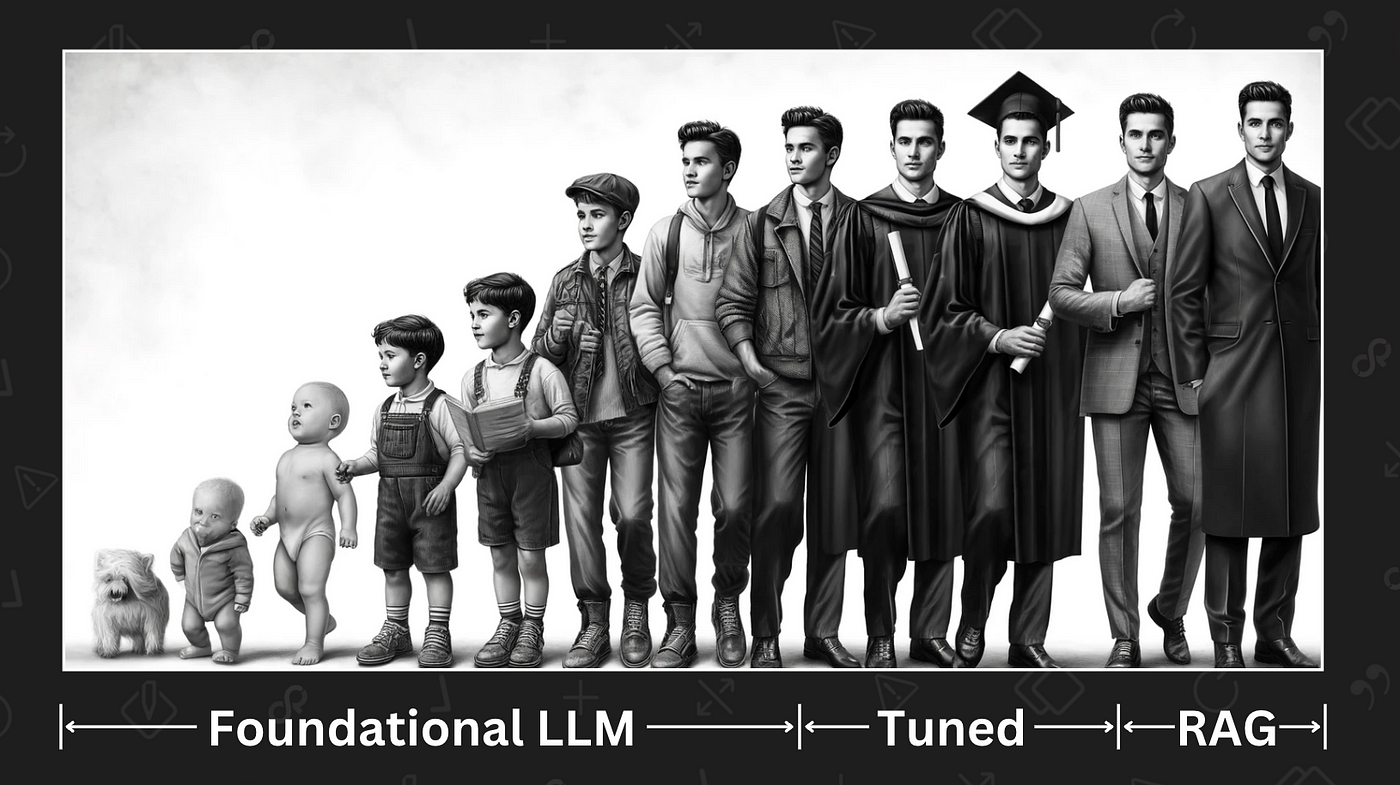

Consider LLMs as someone progressing through life’s educational and professional stages:

Phase 1 — Childhood/Adolescence (Foundational Models)

Like a student progressing through high school, foundational models (e.g., GPT, Llama, Gemini) possess a broad, general knowledge base covering a wide array of subjects, from language to science. This is the longest development phase, requiring substantial data to build the model’s reasoning capabilities. However, like a high schooler puzzled by quantum physics, these models may falter on highly specialized queries, producing inaccuracies or ‘hallucinations’.

Phase 2 — Higher Education (Fine-tuning)

Post-secondary education sharpens a person’s expertise in a chosen field. Similarly, LLMs can be fine-tuned to excel in specific domains (knowledge base fine-tuning) or tasks (instruction fine-tuning), building upon their foundational knowledge. This process is more focused and shorter than the initial training phase. For instance, a model fine-tuned on legal terminology might struggle to recall historical events but can discuss complex legal terms and their applications with ease.

Phase 3 — Professional Life (RAG)

Entering the workforce, an individual applies and further develops their specialized knowledge through real-world experience. For LLMs, this phase corresponds to RAG, which retrieves relevant information at query time and supplies it to the model as context. A model trained and fine-tuned on medical knowledge, when given a patient’s symptoms together with retrieved reference material (the context in RAG), can draw on both its fine-tuning and its foundational reasoning to deliver more accurate diagnoses and reduce hallucinations.
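To make the idea of "context" concrete, here is a minimal sketch of the retrieval and prompt-assembly steps of RAG. It rests on several assumptions: a toy bag-of-words cosine similarity stands in for a real embedding model, the `DOCS` knowledge base and the helper names (`tokenize`, `cosine`, `retrieve`, `build_prompt`) are invented for illustration, and the final call to an LLM is left out entirely.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words counts; a toy stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Assemble the augmented prompt that would be sent to the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{joined}\n\n"
        f"Question: {query}"
    )


# Hypothetical knowledge base, invented purely for illustration.
DOCS = [
    "Persistent cough and fever can indicate a respiratory infection.",
    "Contract law governs agreements between two or more parties.",
    "Fever with a stiff neck requires urgent medical evaluation.",
]

if __name__ == "__main__":
    question = "Patient has fever and a persistent cough"
    # The retrieved passages become the "context" the model reasons over.
    print(build_prompt(question, retrieve(question, DOCS)))
```

In a real system the lexical scorer would typically be replaced by vector search over embeddings, and the assembled prompt would be passed to the (possibly fine-tuned) model. The shape of the pipeline stays the same: retrieve, augment, then generate.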

With this analogy in mind, it becomes easier to reason about which technique, or combination of techniques, best fits your use case, and to make a strong case for that decision to stakeholders.