
LLM Foundation

Large language models (LLMs) have taken the tech world by storm, and organizations everywhere are rushing to adapt them to their use cases. These adaptations can take the form of using vanilla models directly, fine-tuning (for example with LoRA, a parameter-efficient fine-tuning or PEFT method), or Retrieval Augmented Generation (RAG).
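To make "parameter-efficient" concrete: LoRA (Low-Rank Adaptation) keeps a pretrained weight matrix W of shape d × k frozen and trains only a low-rank update ΔW = BA, where B is d × r, A is r × k, and the rank r is much smaller than d or k. The sketch below only compares trainable-parameter counts for a single matrix. The sizes d = k = 4096 and r = 8 are illustrative assumptions, not figures from this article, and the function names are hypothetical.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when every entry of a d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a LoRA update B @ A, with B: d x r and A: r x k.

    The original d x k matrix W stays frozen; only B and A are trained.
    """
    return r * (d + k)


if __name__ == "__main__":
    # Illustrative sizes only (assumed): a square 4096 x 4096 projection, LoRA rank 8.
    d, k, r = 4096, 4096, 8
    full = full_finetune_params(d, k)
    lora = lora_params(d, k, r)
    print(f"full fine-tuning: {full:,} trainable params")
    print(f"LoRA (r={r}):      {lora:,} trainable params")
    print(f"ratio:            {lora / full:.4%}")
```

With these sizes, full fine-tuning trains 16,777,216 parameters for the matrix while LoRA trains 65,536, or 1/256 as many. That much smaller trainable footprint is what makes LoRA parameter-efficient.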

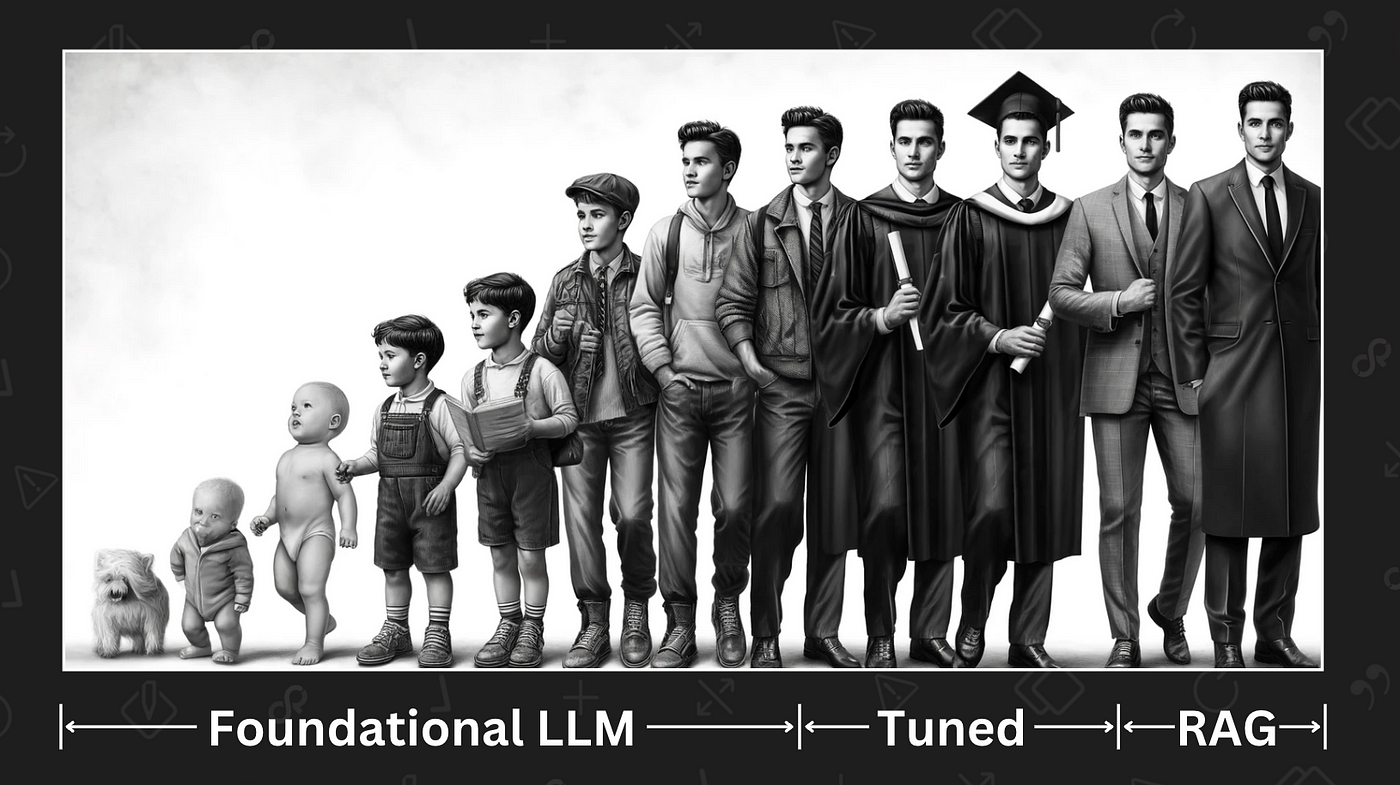

Think of an LLM as a person progressing through life’s educational and professional stages:

1. Phase 1: 🎒 Childhood/Adolescence (Foundation Models)

Like a student who has just finished high school, foundation models (e.g., GPT, Llama, Gemini) possess a broad, general knowledge base covering subjects from language to science. This stage is the longest development phase and requires vast amounts of data to build the model’s reasoning capabilities. However, just as a high schooler might be puzzled by advanced quantum physics, these models can falter on highly specialized queries, producing inaccuracies or ‘hallucinations.’

2. Phase 2: 🎓 Higher Education (Fine-tuning)

Post-secondary education sharpens a person’s expertise in a chosen field. Similarly, LLMs can be fine-tuned to excel in specific domains (knowledge base fine-tuning) or tasks (instruction fine-tuning), building on their foundational knowledge. This process is more focused and much shorter than the initial training phase. For instance, a model fine-tuned on legal text can discuss complex legal terms and their applications with ease, but it may recall unrelated general knowledge, such as historical events, less reliably, a side effect known as catastrophic forgetting.

3. Phase 3: 🏢 Professional Life (RAG)

Entering the workforce, an individual applies and further develops their specialized knowledge through real-world experience. For LLMs, this phase corresponds to RAG: relevant information is retrieved at query time and supplied to the model alongside the question. A model trained and fine-tuned on medical knowledge, when given a patient’s symptoms and records (the ‘context’ in RAG), can combine that context with its training (fine-tuning plus foundational reasoning) to produce more accurate diagnostic suggestions and fewer hallucinations.
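The retrieve-then-generate loop behind Phase 3 can be sketched in a few lines. This is a minimal sketch under stated assumptions: retrieval here uses simple bag-of-words cosine similarity instead of the learned embeddings and vector store a production RAG system would typically use, the documents are made up, the helper names (`tokenize`, `cosine`, `retrieve`, `build_prompt`) are hypothetical, and the final LLM call is left out because it depends on which model and client you use.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents most similar to the query (hypothetical helper)."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, context: list[str]) -> str:
    """Assemble the augmented prompt that would be sent to the LLM (hypothetical helper)."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below. If the answer is not in the context, say so.\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    # Made-up knowledge base; in practice these would be your own documents.
    docs = [
        "Refunds are issued within 14 days of a returned item being received.",
        "Our support desk is open Monday to Friday, 9am to 5pm.",
        "Shipping to international addresses takes 7 to 10 business days.",
    ]
    question = "How long do refunds take?"
    prompt = build_prompt(question, retrieve(question, docs, top_k=1))
    # The resulting string would then be passed to the model of your choice.
    print(prompt)
```

The model itself is unchanged; what improves the answer is the retrieved context placed in the prompt, which is why RAG pairs naturally with a foundation or fine-tuned model rather than replacing it.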

Keeping this analogy in mind makes it easier to reason about which technique, or combination of techniques, best suits your particular use case, and to make a strong case for your decision to stakeholders.

Hope you enjoyed reading this article. Let me know your thoughts in the comments!

The Double-Edged Sword of Interoperability in Development

In the fast-paced realm of software development, the concept of interoperability serves as both a beacon of progress and a cautionary tale. This principle, which advocates for disparate systems and applications to work seamlessly together, holds the promise of a more integrated and efficient technological ecosystem. However, as with any significant shift in paradigms, it brings with it a set of challenges and concerns, particularly regarding the pace of innovation and the landscape of consumer choices.

The Promise of Interoperability

At its core, interoperability represents a utopian vision for the world of development. It breaks down silos, fostering an environment where software and hardware from different vendors can communicate and operate together without friction. This not only enhances user experience but also pushes the industry towards adopting universal standards. For developers, especially those working on the front-end of applications, interoperability means less time spent on ensuring compatibility across platforms and more focus on innovation and enhancing core functionalities.

Interoperability opens up avenues for more pro-consumer options in the long term. By standardizing how systems interact, consumers are no longer locked into a single ecosystem; they have the freedom to mix and match products and services that best meet their needs without concern for compatibility issues. This democratization of technology could lead to a more competitive market, driving down prices and pushing companies to innovate continuously to attract and retain consumers.

The Shadows of Interoperability

Despite its advantages, the transition towards interoperability is not without its pitfalls. One of the immediate concerns is the potential slowdown in the rate of innovation. As companies and developers navigate the complexities of making their products compatible with others, resources that could have been directed towards research and development may instead be consumed by the need for compliance with interoperability standards. This shift in focus might stifle creativity and delay the introduction of groundbreaking technologies.

Moreover, the drive for interoperability could inadvertently lead to a homogenization of technologies. With strict standards in place, there’s a risk that developers might prioritize compatibility over unique features, leading to a landscape where products become indistinguishable from one another. This could dampen the diversity of options available to consumers and, paradoxically, stifle competition.

The Long-Term Horizon

However, it’s essential to view these challenges as teething problems rather than insurmountable obstacles. The initial slowdown in innovation is a trade-off for a more integrated and consumer-friendly ecosystem in the future. As developers and companies adapt to interoperability standards, the process of ensuring compatibility will become more streamlined, allowing innovation to flourish once again.

Furthermore, the emphasis on interoperability does not preclude the development of unique features and technologies. Instead, it challenges developers to think creatively about how their innovations can coexist with and enhance the broader ecosystem. This could lead to a renaissance of sorts, where innovation is driven not just by the pursuit of novelty but by the desire to contribute to a more cohesive and user-centric technological landscape.

A Personal Touch on the Horizon

As we stand on the threshold of this new era, the new generation of developers, myself included, is fortunate. We are entering a world of software development that, despite its challenges, is moving toward easier data transfer, more integrated systems, and the dismantling of walled gardens. This environment encourages us to build technologies that bring people together rather than confining them within isolated tech ecosystems. It is a time of significant change, in which our work as developers can shape how society interacts with technology, making the digital world more accessible, inclusive, and interconnected than ever before.

Interoperability, therefore, is not just a technical goal; it’s a vision for a future where technology serves as a unifying force, empowering us to create solutions that reflect the diversity and interconnectedness of our world. For us, the new generation of developers, the journey towards this future is filled with both challenges and unprecedented opportunities to shape a world where technology truly belongs to everyone.

Cloud Systems: CloudSight optimizes cloud spending and usage

Optimoz’s Cloud Systems: CloudSight helps cloud service consumers optimize their spending and cloud usage while taking advantage of cloud elasticity and DevOps. It also gives cloud service resellers a powerful solution for managing their customers’ invoicing and billing. Visit https://cloudsystems.com to learn more about our solutions.

“It’s exciting to have Cloud Systems: CloudSight in our portfolio of services at Optimoz,” said Naresh Patel, President and CEO of Optimoz.

Optimoz is an expert in cloud engineering, from standard to complex projects and everything in between. The company exceeds clients’ expectations even on the most complicated requests. The team uses proven technical skills, lean/agile methodologies, and project management processes to deliver the best cloud solution and optimize clients’ success in the Cloud.

API Test Automation

In a nutshell, an API (Application Programming Interface) is a set of functions that allows independently running applications to share data. Over the past 24 months, more enterprises have begun to modernize their applications by adopting an API-first strategy. This represents a major move away from traditional SOAP-based APIs toward RESTful APIs, and it has increased both the demand for test automation and its complexity.

Automated API Testing – Essential for Cloud Migration & Modernization

Automating API testing is essential for functional, load, regression, and integration testing. API automation streamlines integration and regression testing every time a new change is made, and it allows business functions to be tested separately from the user interface. It also shortens turnaround time across the release cycle (feedback, fixes, and redeployments), which makes applications more reliable. In addition, combining OpenAPI specifications with automated tests that use generated mock data makes it easier to discover integration issues earlier in the API development lifecycle. (more…)
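Testing a business function through its API, separately from any user interface and against mock data, can be illustrated with Python’s standard library alone. This is a minimal sketch, assuming a hypothetical `GET /users/{id}` endpoint whose contract (required fields and their types) would normally come from an OpenAPI specification. Here the contract (`REQUIRED_FIELDS`), the mock payload (`MOCK_USER`), and the mock server class are hand-written, hypothetical stand-ins for the real service.

```python
import json
import threading
import unittest
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical mock payload, standing in for data generated from an OpenAPI spec.
MOCK_USER = {"id": 1, "name": "Test User", "email": "test.user@example.com"}
# Hypothetical contract: fields the spec would mark as required, with their types.
REQUIRED_FIELDS = {"id": int, "name": str, "email": str}


class MockUserAPI(BaseHTTPRequestHandler):
    """Minimal mock REST endpoint: GET /users/1 returns MOCK_USER, anything else 404."""

    def do_GET(self):
        if self.path == "/users/1":
            body = json.dumps(MOCK_USER).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # silence per-request logging during tests


class UserAPITest(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        # Port 0 asks the OS for any free port; the server runs in a background thread.
        cls.server = HTTPServer(("127.0.0.1", 0), MockUserAPI)
        cls.base_url = f"http://127.0.0.1:{cls.server.server_address[1]}"
        cls.thread = threading.Thread(target=cls.server.serve_forever, daemon=True)
        cls.thread.start()

    @classmethod
    def tearDownClass(cls):
        cls.server.shutdown()
        cls.server.server_close()

    def test_get_user_matches_contract(self):
        with urllib.request.urlopen(f"{self.base_url}/users/1") as resp:
            self.assertEqual(resp.status, 200)
            self.assertEqual(resp.headers["Content-Type"], "application/json")
            payload = json.loads(resp.read())
        # Check every required field is present with the expected type.
        for field, expected_type in REQUIRED_FIELDS.items():
            self.assertIn(field, payload)
            self.assertIsInstance(payload[field], expected_type)

    def test_unknown_user_returns_404(self):
        with self.assertRaises(urllib.error.HTTPError) as ctx:
            urllib.request.urlopen(f"{self.base_url}/users/999")
        self.assertEqual(ctx.exception.code, 404)


if __name__ == "__main__":
    # exit=False keeps the interpreter running after the tests finish.
    unittest.main(argv=["api_test_sketch"], exit=False)
```

In a real pipeline, the same assertions would run against a test environment on every change, which is what makes API automation useful for regression testing; dedicated tools can also generate mocks and contract checks directly from an OpenAPI document.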

Rethinking API Governance with Deloitte, OPTIMOZ and USCIS

From the show floor at Google Cloud Next ’19, Deloitte, OPTIMOZ and USCIS discuss patterns and anti-patterns for achieving the right-sized API governance approach for your organization.

Our Guide to Choosing a Cloud Provider

By one industry estimate, 80 percent of new application starts will be in the Cloud by 2023, and some exuberant analysts predict that the creation of new on-premises data centers will end by 2025. In addition, enterprises are migrating existing workloads to the Cloud at a rapid pace. There are five global leaders in Cloud services (Amazon AWS, Microsoft Azure, Google Cloud, IBM Cloud, and Alibaba Cloud) and many other regional and national companies with credible offerings. Selecting the right Cloud provider can be challenging. Here are the things you should consider when selecting a Cloud services partner:

- What Is Your Migration + Modernization Strategy?

Most enterprises have multiple important applications that they would like to move to the Cloud, and moving them there can be tricky. We recommend performing a detailed application portfolio analysis and then creating an official Cloud strategy for the enterprise before embarking on any migration + modernization effort. Your enterprise should answer the following questions: (more…)

The Six Secrets to a Successful Cloud Migration + Modernization

At Optimoz, we spend a lot of our time helping companies migrate their important applications to the Cloud. It’s harder than you might think. Most Cloud applications are built with technologies and tools that weren’t mainstream five years ago. Cloud platforms operate completely differently from on-premises data centers, and the cost model for delivering Cloud applications is also completely different. Best-in-class methods for building, testing, deploying, and operating applications have advanced significantly, but most enterprises haven’t kept up. The demand for skilled Cloud technologists far outstrips the supply, and keeping applications and data secure is getting more challenging.

So, if getting your applications running well in the Cloud is so hard, why do it? Because when it is done right, the benefits are huge: (more…)

Apigee – A Powerful, Next Generation API Management Platform

Driven by the power of the Cloud, the software development process and its lifecycles are transforming. Highly available architectures and immutable infrastructure are making downtime increasingly rare, and the scale of utilization is growing as well. We see a large number of workloads being migrated to the Cloud, and, with a few notable exceptions, all new development is targeting the Cloud.

New Methods Create New Challenges

Generally speaking, the new approach to software development is a boon to both developers and business stakeholders; however, it brings interesting new challenges in three areas: (1) embedding security in the development process, (2) properly leveraging and managing microservices, and (3) managing the APIs that underpin the new approach to software. (more…)